Why ERPC VPS Delivers High Performance

When developers begin building applications or bots on Solana, many naturally choose large, general-purpose clouds based on their past experience.

In the Web2 world, these clouds have effectively been the standard, and they have provided sufficient performance.

It is therefore natural to assume that the same approach would be suitable for Solana as well.

However, this assumption breaks down significantly for Solana workloads.

Large, general-purpose clouds prioritize versatility and flexibility above all else. For workloads like Solana, where low latency directly affects outcomes, the resulting structural differences become visible immediately.

This article explains, step by step, why Solana workloads fall short of expected performance on large general-purpose clouds, and how ERPC VPS is structured to address these issues.

Why “cloud slowness” is almost never noticed in Web2

First, most Web2 applications are not as mission-critical as financial applications.

Services such as social networks, e-commerce, business tools, and content delivery can tolerate a certain amount of delay and still function as products.

For this reason, the following sources of structural latency inside large general-purpose clouds rarely surface as issues:

- Multiple virtualization layers (virtual NICs, virtual switches, etc.)

- Internal bandwidth shared among many users

- CPU overcommit (assigning more virtual cores than physical cores)

- Additional processes for billing and monitoring

- Older CPU generations being made available to general users

These mechanisms are necessary for cloud operations, but in Web2 workloads their impact is minor, and there are few opportunities to notice them.

Solana workloads are fundamentally different.

Web3 applications sit “adjacent to finance,” and everything can become mission-critical

Applications built on Solana and other blockchains sit close to the financial domain.

Asset movement, liquidation conditions, price changes, and transaction ordering are all directly tied to outcomes.

In particular, market-related workloads require transaction volume and speed far exceeding traditional card-based payments.

Even a few milliseconds of delay can lead to failed execution or worse pricing.

In addition, Solana’s chain data volume is extremely large; subscribing properly to Shreds, logs, and gRPC events can easily result in several terabytes of data per day.
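To put "several terabytes per day" in network terms, the daily volume can be converted into the average bandwidth it implies. The sketch below is only arithmetic; the 1, 3, and 5 TB/day inputs are illustrative assumptions rather than measured figures, and real streams are bursty, so peak bandwidth will be higher than the average.

```python
# Convert a daily data volume into the average sustained bandwidth it implies.
# The volumes used in the demo loop are illustrative assumptions, not measurements.

SECONDS_PER_DAY = 24 * 60 * 60


def sustained_mbps(terabytes_per_day: float) -> float:
    """Average bandwidth in Mbit/s for a daily volume given in decimal terabytes."""
    bits_per_day = terabytes_per_day * 1e12 * 8
    return bits_per_day / SECONDS_PER_DAY / 1e6


if __name__ == "__main__":
    for tb in (1, 3, 5):
        print(f"{tb} TB/day is roughly {sustained_mbps(tb):.0f} Mbit/s sustained")
```

Under these assumptions, 3 TB/day corresponds to a little under 280 Mbit/s flowing around the clock, before any bursts.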

This is fundamentally different from the typical Web2 traffic profiles that large clouds were originally designed for.

In this way, Solana provides no opportunity to hide the structural latency or cost characteristics present inside these clouds.

From the very beginning, these characteristics appear directly as disadvantages or operational costs.

Why large general-purpose clouds are not suited for Solana

Below we explain, factor by factor, why large general-purpose clouds are structurally mismatched with Solana’s high-speed requirements.

1. The CPUs available to general users are several generations old

Bare metal servers and VPS (VMs) made available by large clouds typically use CPUs that are several generations behind.

The latest high-clock CPUs do not align with the provider’s operational efficiency or inventory strategy, and therefore rarely appear as user-selectable options.

For Solana workloads, single-thread performance and cache structure are important, and differences in CPU generation affect:

- How many transactions can be processed

- How many streams can be handled without falling behind

- How fast data can be processed

2. Many virtualization layers and long network paths (higher network latency)

Large general-purpose clouds must run many different applications simultaneously on shared physical hardware.

To support this, multiple virtualization and internal networking layers are added.

Examples include:

- Hypervisors for running virtual machines

- Virtual NICs and switches

- Internal firewalls and load balancers

- Billing and monitoring agents

These components are necessary for cloud operations, but from Solana’s perspective:

- Each one lengthens the network and processing path

- Each one introduces latency and jitter

For workloads that constantly handle streaming data such as Shreds or gRPC, these “additional waypoints” accumulate directly as disadvantages.

3. Overcommit creates unstable performance

Large clouds increase efficiency by running many virtual machines on one physical server.

For example, a server with a 64-core physical CPU may host many 8-core or 16-core VMs, adding up to far more than 64 virtual cores.

This practice—assigning more virtual cores than physical cores—is overcommit.
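The 64-core example can be made concrete with a small calculation. This is a sketch under stated assumptions: the tenant mix (four 16-core VMs and twelve 8-core VMs) is hypothetical, and `overcommit_ratio` and `worst_case_core_share` are illustrative helper names, not part of any provider's tooling.

```python
from typing import Sequence


def overcommit_ratio(physical_cores: int, vm_vcpus: Sequence[int]) -> float:
    """Total virtual cores assigned divided by physical cores on the host."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(vm_vcpus) / physical_cores


def worst_case_core_share(physical_cores: int, vm_vcpus: Sequence[int]) -> float:
    """Fraction of a physical core each vCPU gets if every VM is busy at once."""
    total = sum(vm_vcpus)
    if total == 0:
        raise ValueError("at least one vCPU must be assigned")
    return min(1.0, physical_cores / total)


if __name__ == "__main__":
    # Hypothetical tenant mix on a 64-core host: 4 x 16 vCPUs + 12 x 8 vCPUs = 160 vCPUs.
    vms = [16] * 4 + [8] * 12
    print("overcommit ratio:", overcommit_ratio(64, vms))
    print("worst-case share per vCPU:", worst_case_core_share(64, vms))
```

With this hypothetical mix the host is overcommitted 2.5 times, so in the worst case each virtual core receives only 40% of a physical core.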

The assumptions are:

- Not all VMs will use 100% of their CPU simultaneously

- CPU time can be borrowed between VMs depending on activity

For Web2 workloads, these assumptions are reasonably valid.

However, Solana workloads often include multiple processes that simultaneously require significant CPU.

On an overcommitted server, CPU contention occurs more frequently, and virtual cores must wait in a queue until the hypervisor schedules them onto a physical core.

Consequently:

- Benchmarks may look fast

- Actual latency in real workloads varies significantly depending on time of day and other tenants’ load

For Solana—where transaction timing and stream processing timing directly affect results—this jitter is a major disadvantage.
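The gap between a good-looking benchmark and unstable real-world latency usually shows up in the tail of the distribution rather than in the median. The following sketch summarizes latency samples with nearest-rank percentiles; the two sample sets are invented for illustration and do not come from any real host.

```python
import math
import statistics
from typing import Dict, Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Median, tail, worst case, and spread of latency samples in milliseconds."""
    return {
        "p50": percentile(samples_ms, 50),
        "p99": percentile(samples_ms, 99),
        "max": max(samples_ms),
        "stdev": statistics.pstdev(samples_ms),
    }


if __name__ == "__main__":
    # Invented samples: both hosts share a median, but one has contention spikes.
    quiet_host = [1.0] * 99 + [1.2]
    noisy_host = [1.0] * 95 + [5.0] * 5
    print("quiet:", summarize(quiet_host))
    print("noisy:", summarize(noisy_host))
```

Both hypothetical hosts report the same median, so a benchmark that quotes only typical latency would rate them equally, while the p99 values differ by a factor of five.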

4. High data transfer volumes result in costly usage-based billing

Serious monitoring of Solana chain data frequently involves several terabytes of daily transfer through Shreds, logs, and gRPC events.

Large clouds charge separately for:

- Outbound network traffic

- Sometimes internal network traffic

- Storage I/O

In Web2 workloads, these charges are usually modest because traffic volume is comparatively small.

But for Solana workloads, simply subscribing to streams can result in network charges of several hundred dollars per day, making continued operation impractical.
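A rough estimate shows how usage-based billing reaches that range. This is a minimal sketch: the per-gigabyte price of 0.09 USD is a hypothetical placeholder, not a quote from any provider, and actual pricing varies by provider, region, and volume tier.

```python
# Rough transfer-cost estimate for usage-based network billing.
# ASSUMED_PRICE_PER_GB_USD is a hypothetical placeholder, not a real price list entry.

ASSUMED_PRICE_PER_GB_USD = 0.09
GB_PER_TB = 1000  # decimal units


def daily_transfer_cost_usd(terabytes_per_day: float,
                            price_per_gb_usd: float = ASSUMED_PRICE_PER_GB_USD) -> float:
    """Cost of one day of billed transfer at a flat per-GB price."""
    return terabytes_per_day * GB_PER_TB * price_per_gb_usd


def monthly_transfer_cost_usd(terabytes_per_day: float,
                              price_per_gb_usd: float = ASSUMED_PRICE_PER_GB_USD,
                              days: int = 30) -> float:
    """Same estimate extended over a billing month."""
    return daily_transfer_cost_usd(terabytes_per_day, price_per_gb_usd) * days


if __name__ == "__main__":
    for tb in (1, 3, 5):
        print(f"{tb} TB/day: about {daily_transfer_cost_usd(tb):.0f} USD/day, "
              f"{monthly_transfer_cost_usd(tb):.0f} USD/month")
```

At the assumed price, 3 TB of billed transfer per day comes to roughly 270 USD per day, which over a month exceeds the cost of many dedicated servers.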

Thus, large general-purpose clouds are structurally and economically misaligned with Solana workloads.

Why ERPC tested data centers around the world

Understanding these constraints, we needed to identify infrastructure that was actually suitable for Solana.

To do this, we rented data centers around the world and ran real Solana workloads to evaluate their behavior.

Even within the same city, suitability for Solana varies depending on:

- Building structure

- Rack position

- Internal cabling

- IXes and transit providers

- Network hardware performance and configuration

- ISP capacity and routing quality

- The amount and quality of physical fiber routes

- Bandwidth guarantees during congestion

Through repeated testing, we clearly identified:

- Locations that behave consistently and predictably under Solana workloads

- Locations that do not, regardless of advertised specifications

We removed the latter and refined our choices again and again, eventually forming our current infrastructure and network architecture.

This accumulated knowledge directly supports the foundation of ERPC VPS and RPC infrastructure.

Why ERPC VPS delivers high performance

The following explains how ERPC VPS is designed structurally to support high-performance Solana workloads.

Removing unnecessary layers by focusing on Solana workloads

Large general-purpose clouds include many layers to support a wide variety of applications.

Most of these layers do not provide direct value for Solana and instead create latency.

By focusing on Solana workloads, ERPC VPS removes the following one by one, in a careful and controlled manner:

- Layers unnecessary for Solana traffic

- Components present only for multi-purpose cloud operations

This is not “simplification for its own sake” but a design principle: retain only what is meaningful for Solana and remove everything else.

Latest-generation CPUs and ECC DDR5 memory

Large clouds generally do not expose the latest-generation CPUs to users.

ERPC VPS adopts these CPUs and delivers configurations equivalent to those used in Solana RPC and Shredstream nodes.

This avoids bottlenecks due to old CPU generations and provides a foundation capable of handling Solana’s indexing, trading logic, and real-time analytics.

No overcommit

Premium VPS never overcommits physical CPU cores.

Each allocated core is backed directly by a physical core.

This avoids:

- Performance varying depending on other tenants

- CPU contention under heavy load

Standard VPS also keeps overcommit rates extremely low to ensure stable CPU behavior.

CPUs operate at maximum turbo at all times

Many server environments dynamically adjust CPU frequency for power or thermal reasons.

For Solana workloads, however, such variability can cause performance dips at critical moments.

ERPC VPS is tuned so that CPUs operate at consistently high clock speeds, minimizing downward fluctuation under load and ensuring performance stability.
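On a Linux host, clock behavior can be observed from user space through the kernel's cpufreq sysfs files. The sketch below is one way to check it, not a description of how ERPC tunes its servers. It assumes the standard `scaling_governor`, `scaling_cur_freq`, and `cpuinfo_max_freq` files are present; many virtual machines do not expose them, in which case the result is simply empty. The function names and the 90% threshold are illustrative.

```python
from pathlib import Path
from typing import Dict, List


def read_cpufreq(sysfs_root: str = "/sys/devices/system/cpu") -> Dict[str, dict]:
    """Read governor and frequencies (kHz) for every CPU that exposes cpufreq."""
    result: Dict[str, dict] = {}
    for cpufreq_dir in sorted(Path(sysfs_root).glob("cpu[0-9]*/cpufreq")):
        try:
            result[cpufreq_dir.parent.name] = {
                "governor": (cpufreq_dir / "scaling_governor").read_text().strip(),
                "cur_khz": int((cpufreq_dir / "scaling_cur_freq").read_text()),
                "max_khz": int((cpufreq_dir / "cpuinfo_max_freq").read_text()),
            }
        except (OSError, ValueError):
            # File missing or unreadable (common inside VMs): skip this CPU.
            continue
    return result


def cores_below(info: Dict[str, dict], min_fraction: float = 0.9) -> List[str]:
    """CPUs whose current clock is under min_fraction of their hardware maximum."""
    return [name for name, cpu in info.items()
            if cpu["cur_khz"] < min_fraction * cpu["max_khz"]]


if __name__ == "__main__":
    info = read_cpufreq()
    if not info:
        print("cpufreq information is not exposed on this system")
    for name, cpu in info.items():
        print(f"{name}: governor={cpu['governor']} "
              f"{cpu['cur_khz'] / 1e6:.2f} of {cpu['max_khz'] / 1e6:.2f} GHz")
    print("below 90% of max:", cores_below(info))
```

Sampling this repeatedly under load gives a rough picture of whether clocks hold steady or drop at busy moments.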

Running on Solana’s key network hubs

ERPC VPS is not merely “located near our own infrastructure.”

It runs directly on the networks where Solana validators and stake are globally concentrated.

Standard VPS is deployed on a network ranked second worldwide in validator count and stake.

Premium VPS runs on the network ranked first globally in both metrics, directly connected to a major hub where leaders and core validators converge.

Thus, ERPC VPS:

- Shares the same network as ERPC’s RPC, gRPC, and Shredstream infrastructure, and

- Operates on the very networks where validators and stake are most concentrated

This places workloads physically and logically closer to leaders.

As a result, even identical code and logic will exhibit structurally different performance when running on ERPC VPS compared to large general-purpose clouds—especially in leader-adjacent detection and transaction submission.

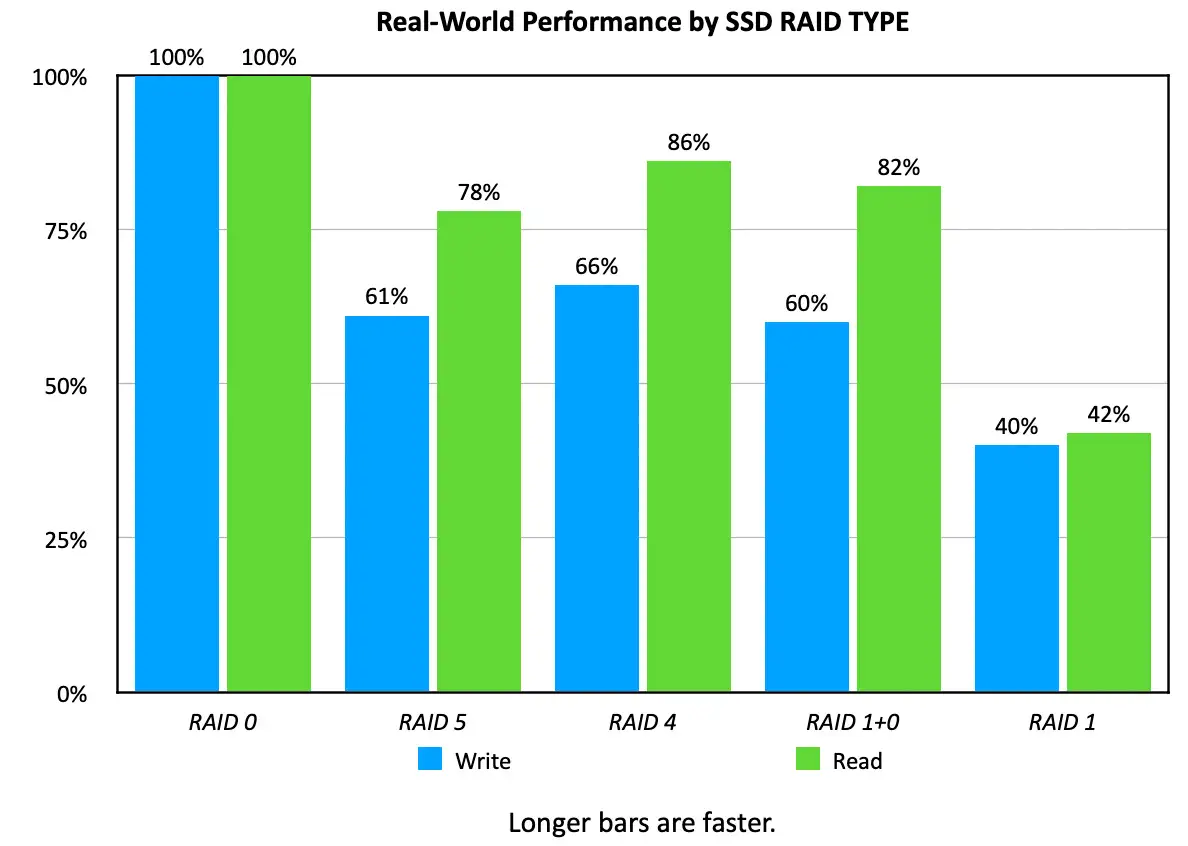

RAID0 storage configuration

Many cloud and VPS providers prioritize data protection and therefore use RAID10 or RAID4/5/6.

For Web2 systems where user data resides on the server, this is appropriate.

However, many Web3 applications and Solana nodes do not hold irreplaceable data at the application layer.

The blockchain itself serves as a distributed ledger, making resynchronization or rebuilding possible.

Many users also prefer performance over mirroring, and storage I/O performance directly affects Solana node behavior.

For these reasons, ERPC VPS uses RAID0 to maximize I/O throughput.
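The trade-off can be illustrated with an idealized model that compares RAID0 and RAID10 on the same set of disks. This is a sketch only: it assumes perfect striping with no controller or filesystem overhead, the disk count, capacity, and throughput are hypothetical, and real arrays will land below these ideal figures.

```python
from typing import Dict


def raid_estimate(level: str, disks: int, disk_tb: float, disk_write_mbps: float) -> Dict[str, float]:
    """Idealized capacity, write throughput, and guaranteed fault tolerance."""
    if level == "raid0":
        if disks < 2:
            raise ValueError("RAID0 needs at least 2 disks")
        # Every disk holds unique stripes: full capacity, full parallelism, no redundancy.
        return {"usable_tb": disks * disk_tb,
                "ideal_write_mbps": disks * disk_write_mbps,
                "disk_failures_tolerated": 0}
    if level == "raid10":
        if disks < 4 or disks % 2:
            raise ValueError("RAID10 needs an even number of disks, at least 4")
        # Disks are mirrored in pairs: half the capacity and half the write parallelism.
        # One failure is always survivable; more only if they hit different pairs.
        return {"usable_tb": disks * disk_tb / 2,
                "ideal_write_mbps": disks * disk_write_mbps / 2,
                "disk_failures_tolerated": 1}
    raise ValueError(f"unsupported level: {level}")


if __name__ == "__main__":
    # Hypothetical array: 4 NVMe disks, 2 TB each, 3000 MB/s sequential write per disk.
    for level in ("raid0", "raid10"):
        print(level, raid_estimate(level, disks=4, disk_tb=2.0, disk_write_mbps=3000))
```

In this model RAID0 offers twice the usable capacity and twice the ideal write throughput of RAID10 on the same disks, at the price of losing the whole array if any one disk fails.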

In Web3 infrastructure, choosing where to place redundancy and at which layer is essential for balancing performance and safety.

Reference: Real-World Speed Tests for Different SSD RAID Levels

https://larryjordan.com/articles/real-world-speed-results-for-different-raid-levels/

Conclusion

There is no single factor that explains the performance of ERPC VPS.

CPU generation, overcommit policy, removal of power-saving constraints so that CPUs run at maximum turbo, data center selection, network paths, RAID configuration, and how far unnecessary layers are removed for Solana workloads: each of these factors may appear small on its own, but when each one is thoroughly refined, the cumulative effect is the performance ERPC VPS delivers today.

Through these efforts, we have built an infrastructure fundamentally different from large, general-purpose clouds—an infrastructure specialized for Web3 and blockchain workloads.

For Solana, this structural difference translates directly into meaningful performance advantages.

For configuration inquiries, use-case discussions, or deployment planning, please feel free to contact us via the Validators DAO Discord.

- ERPC Official Website: https://erpc.global/

- Validators DAO Official Discord: https://discord.gg/C7ZQSrCkYR